I did this interview with James Lorenzo a while back for a series that the video team over at Football Whispers has been working on, called ‘The Science of Football’, which has featured prominent names in football data analytics such as Omar Chaudhuri, David Sumpter and Chris Anderson.

I thought I’d use the video coming out as an opportunity to put in writing some thoughts and arguments which I would have wanted to make had I not gotten so flustered talking in front of a camera in a language that is not my first.

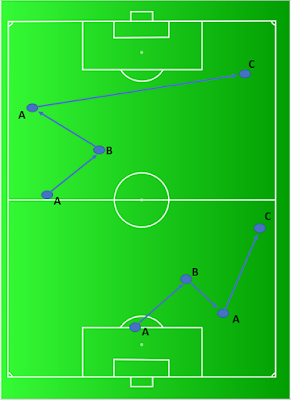

James asked me about passion in football, an element which, in his words, is often “thrown at the stats community as something you can’t measure”, and which forms the basis of many arguments to discredit the use of data analytics in football. My honest answer to whether we can use data to measure passion on the pitch should have been “Uuuhhhm, maybe? Maybe if we clarify what exactly you mean by passion we can try to talk about it…”. That doesn’t make for compelling viewing, though. Here, however, I can indulge a little: maybe passion has something to do with trying hard, with tracking back in defence even when you have over-committed in attack. If that is the case, then we potentially can measure it: a passionate player will perform more of these actions, and if these actions directly improve his team’s chances of winning matches (for example by defusing a dangerous counter-attack), then a performance model should pick up on this signal.

But what if, instead of improving a team’s performance in such a tangible way (stopping a dangerous situation for the opposition), passion shines through in much more innocuous situations? Perhaps the passionate player hounds his mark towards the touchline until he stumbles over himself and gives away a throw-in on the halfway line. That throw-in will hardly send any direct signal about the outcome of the match, right? But perhaps these sorts of actions inspire the passionate player’s teammates and rile up their energy, which is why he is important to the team, a coach might argue. Well, if that’s the case, then an intelligent modeller might still be able to pick up on the signal: if the effect is real, then teams that produce many of these actions (opposition player hounded out of play) should win more matches. Notice that the model doesn’t even have to know that it’s picking up on passion; it would still rate these players higher.
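To make that argument a little more concrete, here is a toy simulation in Python built on entirely made-up assumptions: a hidden `passion` value, which we never record, drives both how often a team hounds an opponent out of play and how likely it is to win. The analysis only ever sees the event count and the result, yet a crude comparison of win rates still picks up the effect. The names, the ten-chances-per-match setup and the effect size are all invented for illustration; this is a sketch of the reasoning, not a real performance model.

```python
import random


def simulate_matches(n_matches, seed=0):
    """Simulate matches driven by a hidden trait the analyst never observes.

    The latent 'passion' value (a made-up quantity for illustration) raises both
    the number of 'opponent hounded out of play' events and the chance of winning.
    Only the event count and the result are returned, as in real event data.
    """
    rng = random.Random(seed)
    matches = []
    for _ in range(n_matches):
        passion = rng.random()  # latent, never recorded
        # Assumed: ten chances per match to hound an opponent out of play.
        hounded = sum(rng.random() < passion for _ in range(10))
        # Assumed effect size: win probability ranges from 0.3 to 0.6.
        won = rng.random() < 0.3 + 0.3 * passion
        matches.append((hounded, won))
    return matches


def win_rate_by_group(matches, threshold):
    """Compare win rates of matches with many vs few hounding events."""
    high = [won for hounded, won in matches if hounded >= threshold]
    low = [won for hounded, won in matches if hounded < threshold]
    return sum(high) / len(high), sum(low) / len(low)


if __name__ == "__main__":
    high, low = win_rate_by_group(simulate_matches(10_000, seed=1), threshold=5)
    print(f"win rate, many hounding events: {high:.2f}")
    print(f"win rate, few hounding events:  {low:.2f}")
```

Matches with five or more hounding events should come out with a noticeably higher win rate, even though `passion` never appears in the data the comparison uses. With real event data, the effect (if it exists at all) would be far noisier and tangled up with plenty of other factors.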

I’m obviously being overly simplistic with the examples above, to make the point that everything that happens on a pitch can, in perhaps subtle but very real ways, creep into signals that are detectable in data. There’s also a very real chance that I’m way off the mark here. Perhaps coaches pick up on the signal of what they call passion in the dressing room, when a player is an excellent motivator for his teammates. I’m pretty sure Opta hasn’t started coding events in the dressing room yet. Or perhaps the experienced coach can pick up on subtle signals in a player’s body language and know when he’s trying hard in a way that the data we have available simply can’t. After all, there are so many fields in which the human brain is excellent at picking up signals, much better than data analytics. But as amazing as our brains are, their processing of information is also incredibly prone to mistakes and omissions that can throw us way off.

Take the example of the diagnosis of ‘female hysteria’ by British physicians of the 19th century. I’m using this overtly tongue-in-cheek example because doctors in 19th-century society were by no standard ‘stupid’ people; quite the opposite, they were probably among the brightest people of their time. Nevertheless, no matter how intelligent these men were, the (in hindsight) obviously ridiculous diagnosis of female hysteria was freely dished out to any woman presenting with a wide array of symptoms. The doctors were simply too accustomed to a term that had always been used in the medical circles of their time, and it didn’t occur to them to challenge it. They had seen it many times before, knew what it was about and what its characteristics were, and they knew a woman was suffering from hysteria when they examined her. These intelligent men of the 19th century weren’t actively being stubborn or resisting change; it is simply human nature to act this way, shaped by more biases than we would ever believe.

Now, the key point I want you to understand is that, in my mind, this reflection in no way undermines or dismisses the medical knowledge of these 19th-century physicians. Truth be told, if you were involved in a freak accident tomorrow, bleeding badly and in need of urgent medical attention from a passerby, who would you rather that passerby be: a 19th-century physician, or me smugly reading off Wikipedia how wrong these doctors were in their diagnoses? Not me? Really???

Similarly, I in no way dismiss the organic football knowledge of coaches and scouts. I love football, and I would go gaga if I ever got to meet Pep Guardiola or Arsène Wenger in person. I totally admire coaches and love to hear them speak about the game. So trust me, my point is nowhere near what some skeptics assume people like me in football analytics think: “these dumb coaches know nothing about football, they’re ignorant cavemen”. My point is simply that, in that freak accident, the passerby you really want is a 21st-century doctor who has gone to medical school, embraced the sophistication and advancement of his trade, and is open and willing to take medical knowledge from every source that can provide it, be it his super-experienced mentor who was a doctor back in the 19th century or the latest cutting-edge medical studies. You want a doctor who understands that medicine draws on a wide range of domains of knowledge, and who can pick and choose how to combine these different sources to make sure you survive.

I think the “numbers can’t understand passion” argument is a lazy one, made by people who simply don’t have any grasp of what data science actually is. I also think it is a dangerous one, because younger generations of coaches coming through now will find it easy to cling to that meaningless phrase as an excuse to disregard something they perhaps find challenging rather than make an effort to understand it, and they will inevitably be left behind as football undergoes this new wave of sophistication.
